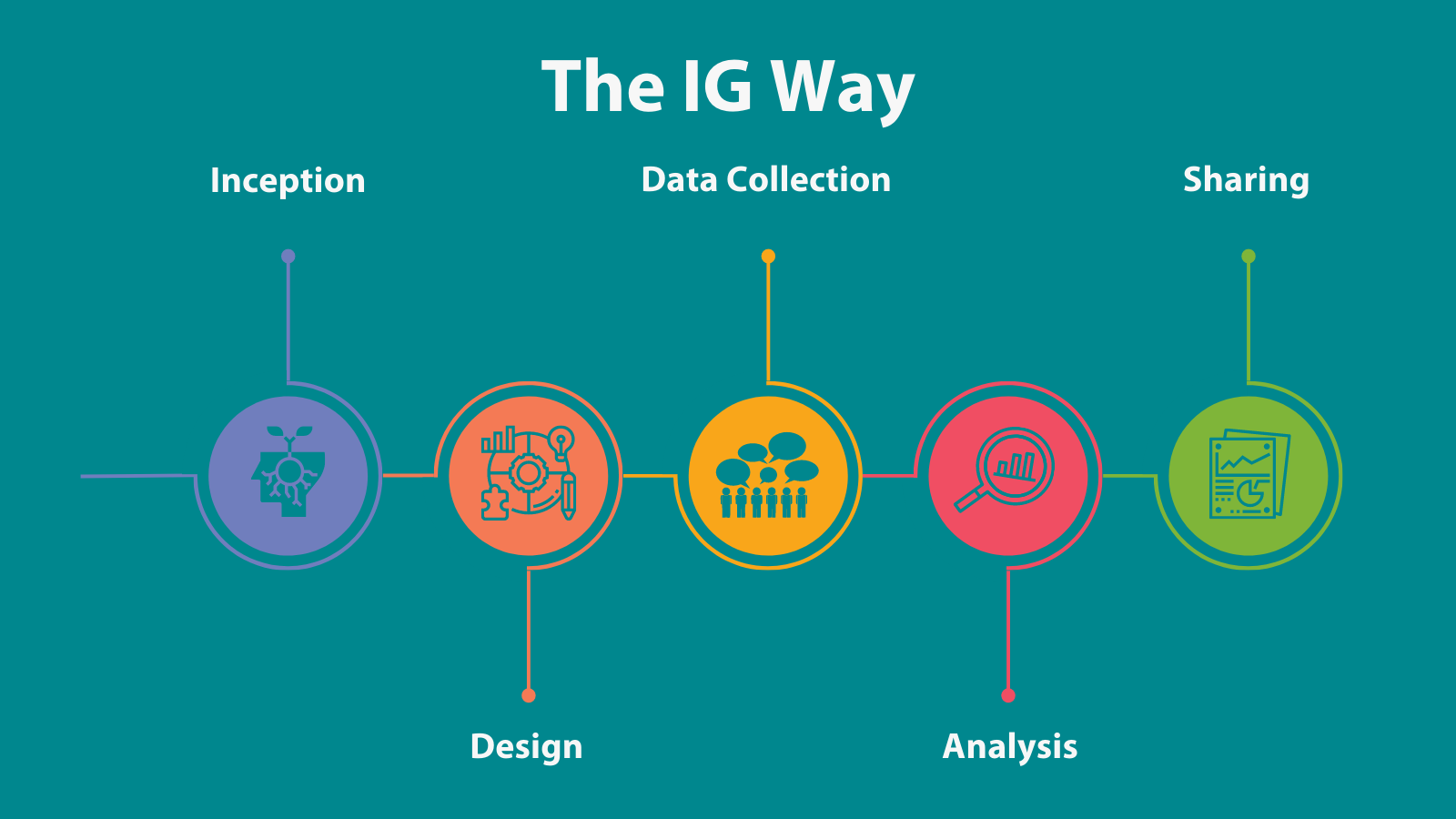

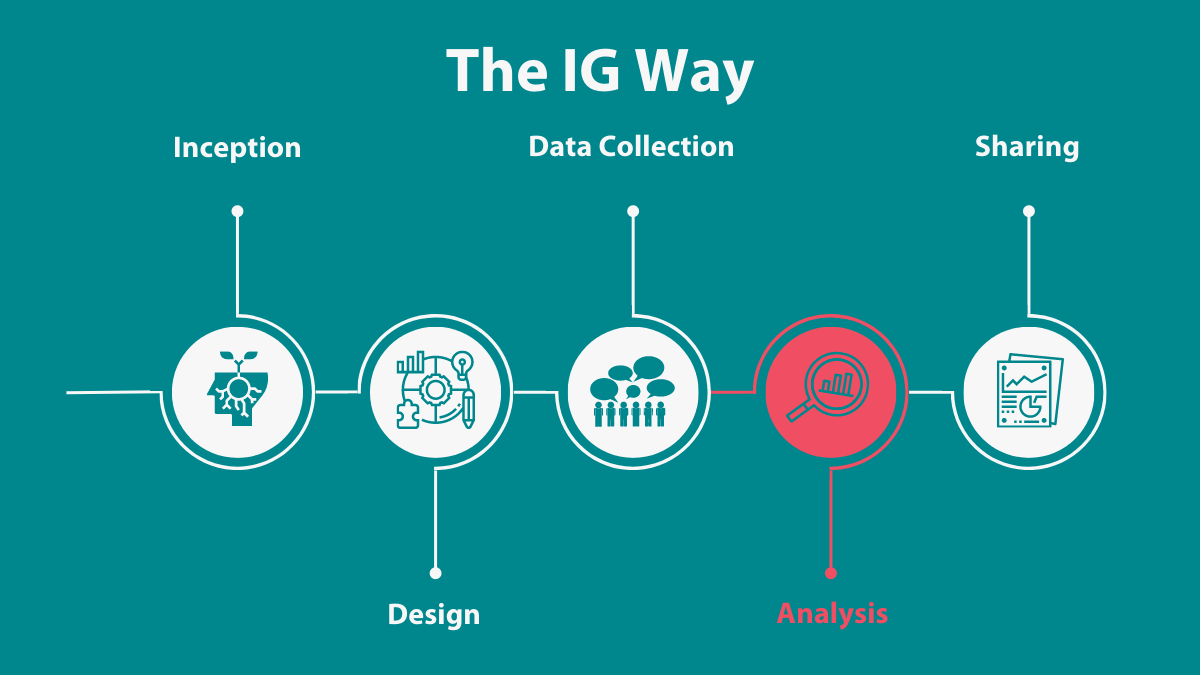

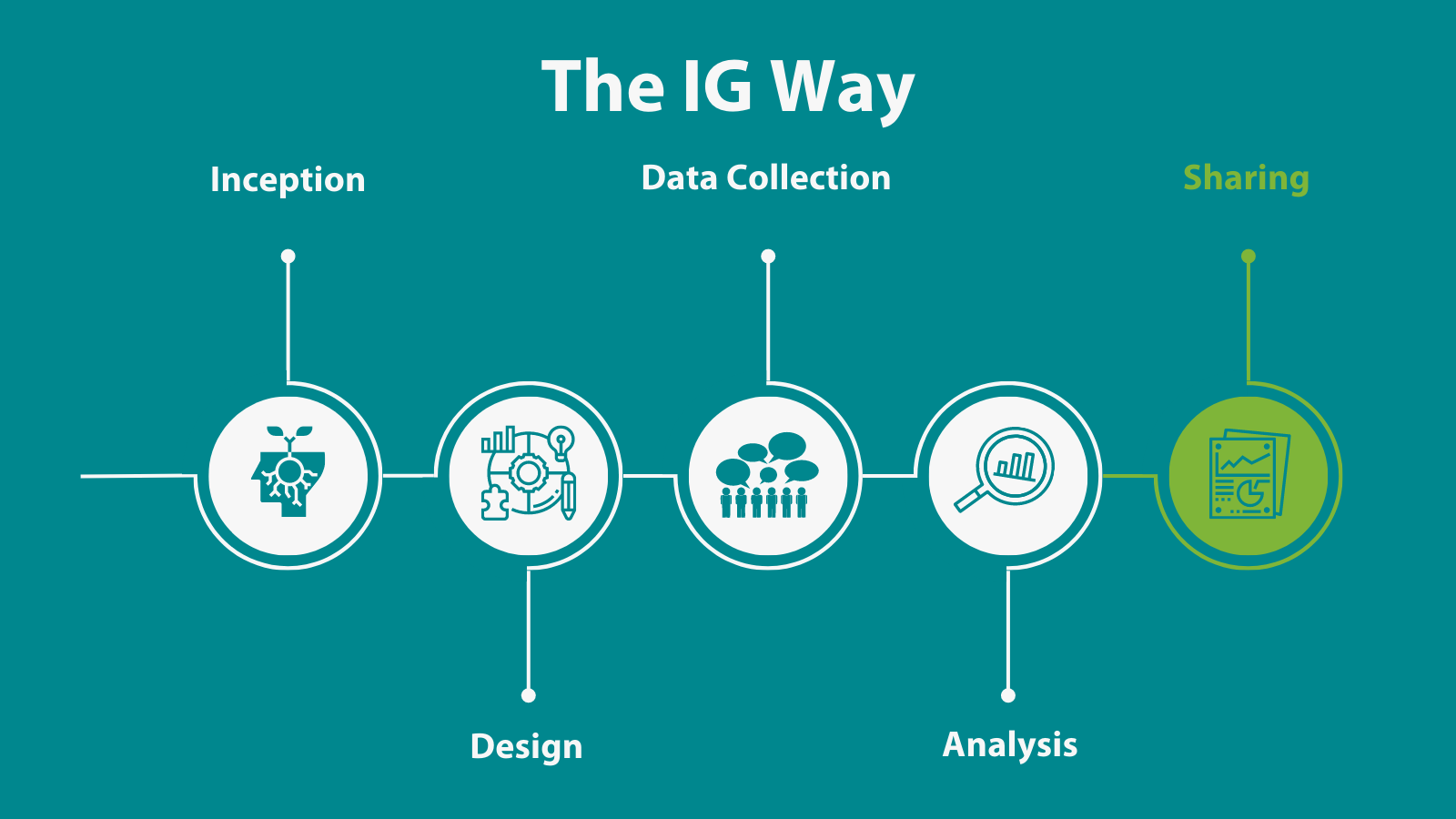

Over more than 20 years of working with partners and community members to conduct evaluation, The Improve Group has learned many lessons. In recent years we have collected key learnings into what we call the “IG Way.” You might notice us mention the IG Way when we talk about phases like inception, when we listen deeply to understand project context and needs, or about common IG Way practices like an emerging findings meeting, when we share initial reflections on evaluation data.

One of our biggest lessons is to keep adapting as we learn—so the IG Way is often changing. When a staff member finds a certain practice useful and effective, they might suggest it as an addition to the IG Way. These practices are documented and are a constant reference throughout an evaluation.

Particularly when it comes to practices intended to promote equity, we have found it helpful to reflect on IG Way activities—and to critically evaluate and improve them. Over the next few months, we will share some reflections on each IG Way phase, including lessons learned and key ways we consider equity throughout a project. Keep coming back to this thread for updates!

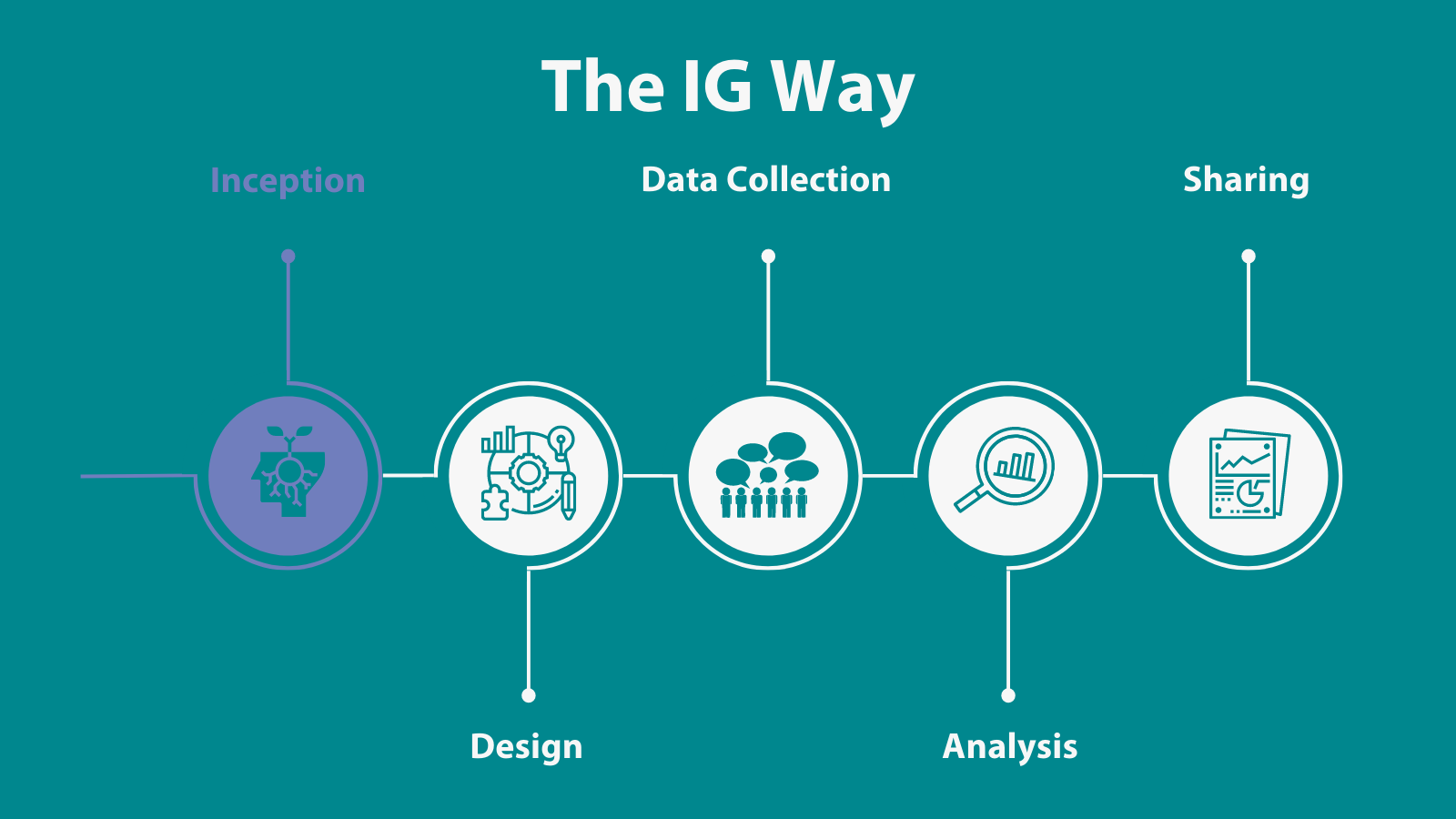

Taking time to listen and learn: equity in the Inception Phase

In the Inception Phase, we take time at the beginning of an evaluation to understand a project from different perspectives; begin building relationships; and finalize a work plan. What does equity look like at this point? A lot of listening and learning, to understand the context in which an evaluation is taking place, how client and community members’ lived experience can guide and inform our work, and where our evaluation expertise fits. We work to:

- Learn about the context and history of a community and their experience with the issues at hand.

- Continually learn, reflect, and iterate so that we stay responsive to what we are learning.

- Name and draw out assumptions and oppressive language/beliefs. For example, in our work with the Minnesota Department of Human Services, we are working to shift from using language based in historical institutional care to more person-centered terms that aren’t stigmatizing (like referring to people receiving services as “people” rather than “clients”).

- Scope the work to focus on important, real change, being clear about possibilities and limitations within the available budget.

- Frame issues, programs, and policies with a system lens, such as reporting what a city needs to do to better support people, rather than what people are lacking, in summarizing a community strengths and needs assessment.

- Identify assumptions about root causes in a program’s theory and framing.

- Plan to use mixed-methods, culturally appropriate, trauma-informed, participatory methods. For example, in conducting reference interviews for Bush Fellowship applicants, we asked references to define what leadership looks like in the candidate’s community so as not to reinforce a mainstream, traditional definition of leadership.

- Use asset-based framing.

- Ensure the evaluation purpose, choice of methods, and interpretation of findings are informed by the affected community.

- Include a focus on equity in evaluation questions, such as asking explicitly who should be benefiting but is not.

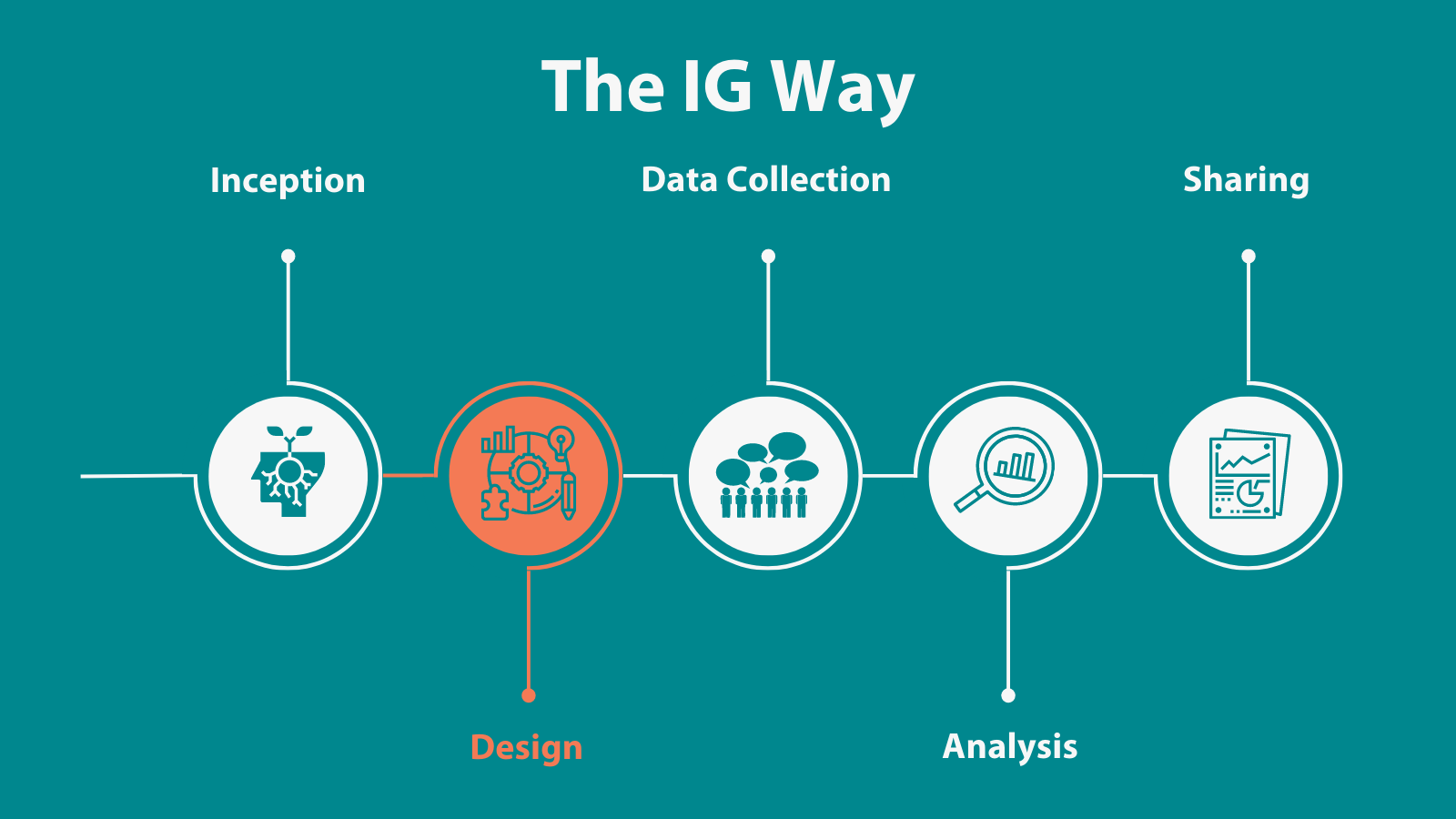

Balancing benefit and burden: equity in the Design Phase

Next, we’re talking about the Design Phase, when we build upon Inception Phase conversations to develop data collection tools and detailed plans for implementation. In these activities, we pay attention to what is accessible, important, and valid to the community members most affected by the evaluation. Our practices include:

- Designing data collection instruments to be responsive, relevant, and valid to those who are providing information.

- Maximizing benefit and minimizing burden, particularly among groups who are frequently surveyed. Benefits can range from the positive change that could result from the evaluation, to new skills in data collection, to compensation or incentives.

- Using culturally appropriate and respectful language and symbols, pictures, colors, and imagery in outreach and tools.

- Continuing to use stakeholder guidance, such as through hiring community liaisons to support data collection. In our work on the Minnesota Youth in Transition Database survey, for example, we bring ideas to a statewide youth council for input on our outreach strategies and on better ways to engage youth.

- Offering thoughtful incentives. Only offering online gift cards can exclude people without internet access. We also consider whether gift cards, Visa cash cards, or actual cash is most appropriate, offering options when possible. We have also paid incentives through cash-sharing apps.

- Understanding analysis needs and carefully planning appropriate sampling. For example, through purposive sampling, we can gather more in-depth insights from particular community members rather than aiming for the breadth of a representative sample. With smaller sample sizes, it is important to work with our clients to clarify what can and cannot be learned from smaller groups—that the group is not statistically representative of entire communities, but that their insights may be very helpful nonetheless.

- Planning logistics of data collection to allow full, safe, and meaningful participation, such as providing child care or offering “asynchronous” data collection that allows people to contribute responses at a time they are available.

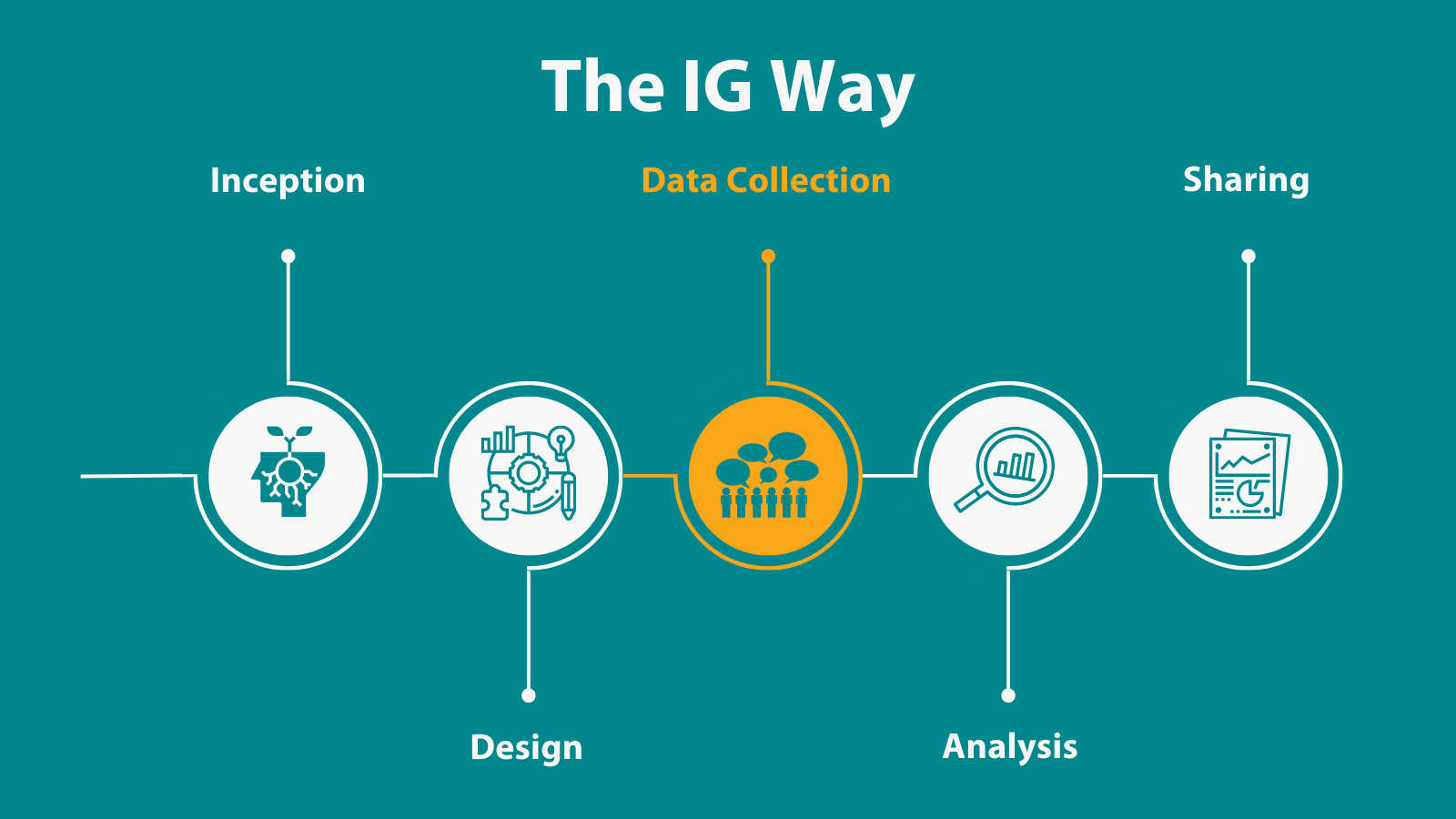

Adapting, iterating, and being community responsive: equity in the Data Collection Phase

In the Data Collection Phase, we collect stories, numbers, and perspectives based on the plans from earlier IG Way phases. At the same time, we intentionally watch for when we need to adapt and shift our plans in order to be responsive and meet goals. As you will see in the list below, this phase is as much about how we collect data as it is about what we collect. We work to:

- Ensure those inviting engagement and collecting data are culturally aware and appropriate. This can look like partnering with people from affected communities to ensure cultural responsiveness is built into our team. In a community strengths and needs assessment for Community Action Partnership of Hennepin County, for example, community partners made recommendations for data collection and led some data collection activities.

- Be transparent about the purpose of an evaluation, how its data will be used, and the security practices in place. This includes communicating appropriately for the audience, such as using plain language to talk about human subjects requirements so the important points do not get lost in the jargon and so people feel comfortable asking questions.

- Be iterative, curious, and humble, monitoring feedback during the process to make changes when goals are not met.

- Build in enough time for adaptation as appropriate. In another community strengths and needs assessment, we paused when we saw we weren’t getting enough survey responses from two groups. Our advisory committee connected us with community data collectors, who led in-home discussion circles and collected surveys, increasing participation.

- Provide space to attend to trauma and respect how it affects people’s engagement, including by minimizing the potential for re-traumatization. In a project working to prevent substance abuse during pregnancy, we offered mental health resources for interviewees as we discussed sensitive subjects.

- Work with paid community members to gather and report on their own data when possible, maintaining data control and ownership within the community.

Engaging community in interpreting findings: equity in the Analysis Phase

In the Analysis Phase, we work with stakeholders to translate collected data into meaningful recommendations and actions. We often spend time cleaning and organizing data to prepare it for quantitative or qualitative analysis. After our initial analysis, we bring the data and our initial interpretation to people affected by the evaluation.

We work to embed equity in the Analysis Phase by aspiring to the following practices in all our projects:

- Engaging stakeholders in interpreting findings through our “emerging findings” meetings. At these interactive meetings, we share our findings from analysis with clients, recipients of services, and others with a close understanding of what we are evaluating. They then become partners in analysis by adding their own interpretation and nuance to the analysis before we turn to finalizing our report.

- Disaggregating data by race, income, gender, disability status, etc., to identify disparities in outcomes, as long as groups are large enough that individuals cannot be identified. For example, in a community needs assessment for a mobile vaccine clinic, we disaggregated data by different demographic groups. This analysis supported what we were hearing in focus groups.

- Taking care to ensure interpretation focuses on closing inequities rather than justifying problematic assumptions or program behaviors, or blaming individuals for not thriving in systems. For example, where data show inequities in outcomes, we frame them as failures of systems to serve everyone equitably, rather than leaving room for harmful interpretations such as that individuals are to blame.
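
For readers who do this kind of analysis in code, the disaggregation practice above can be sketched roughly as follows. This is a minimal illustration, not our actual tooling: the function name `disaggregate`, the field names, and the threshold of 10 in `MIN_GROUP_SIZE` are all hypothetical, and the right minimum group size depends on the project and its privacy requirements.

```python
from collections import defaultdict

# Hypothetical threshold: groups smaller than this are not reported,
# so that individuals in small groups cannot be identified.
MIN_GROUP_SIZE = 10


def disaggregate(records, group_key, outcome_key, min_group_size=MIN_GROUP_SIZE):
    """Return the share of positive outcomes for each group.

    Groups with fewer than `min_group_size` records are reported as None
    (suppressed) rather than as a rate.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [positive outcomes, total records]
    for record in records:
        tally = counts[record[group_key]]
        if record[outcome_key]:
            tally[0] += 1
        tally[1] += 1

    summary = {}
    for group, (positives, total) in counts.items():
        if total < min_group_size:
            summary[group] = None  # suppressed: group too small to report safely
        else:
            summary[group] = positives / total
    return summary
```

Calling `disaggregate(records, "group", "outcome")` on a list of dictionaries returns one rate per group, with `None` marking any group that was too small to report. Differences between the reported rates are a starting point for conversation with stakeholders, not a conclusion on their own.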

Framing findings with fairness: equity in the Sharing Phase

In the Sharing Phase, we ground ourselves in earlier Inception and Design Phase conversations about the systemic and community context of a project. Rather than considering the report an afterthought, we use this phase to tie all our learnings together and present them in a way that prompts action toward equity. To help us do this, we have named these practices:

- Carry through systems-focused framing and contextualization as with earlier phases to avoid potentially harmful misinterpretation. For example, in the final report of our Minnesota child welfare caseload and workload study for the Department of Human Services, we described the systemic factors that have led to a disproportionate number of American Indian/Alaska Native and African American/Black children in the child welfare system.

- Present recommendations in a way that facilitates reflection, action, planning, and implementation. We often pull in insights that stakeholders shared in the interpretation process to pair recommendations for action with key findings.

- Share findings in a community-friendly way. For example, in our evaluation of the Colorado Health Foundation’s Supporting Healthy Minds & Youth Resiliency program, we shared short summaries of workshop notes with all grantees to be transparent. We asked for reflection on the document among grantees at subsequent meetings to ensure we captured the notes appropriately. In another project, we produced a podcast about results to reach people who may not read a written report.

- Include definitions of terms like “structural racism” and “white privilege” to be clear about what we mean and how these issues relate to the evaluation.

- Write in a plain, direct, and accessible way, for example, using active voice instead of passive voice to be clear about who is responsible for an action.

- Seek feedback from clients and project participants, including the affected community, about how well we did in making the project relevant, respectful, and valid. As part of this final phase of an evaluation, we hold internal and external “closing meetings” to reflect with our team, clients, and partners on how we did.

Close-out

This concludes our series on Equity in the IG Way. Thank you so much for staying tuned. We hope that these reflections are helpful to you as you work toward becoming a more equitable evaluator. If you have other ideas or reflections, please don't hesitate to reach out to us at info@theimprovegroup.com! As a learning organization, we continue to reflect on our practice and how we can promote equity across our work, and we will share more learnings as we have them.